HPC in Germany - Who? What? Where?

Rechenzentrum

155.00 TiB (Main Memory)

256 Nodes, 38,848 CPU Cores (AMD, Intel)

52 GPGPUs (AMD, Nvidia)

Fachgruppe Physik

Center for Scientific Computing

19,340 TFlop/s (Peak Performance), 55.00 TiB (Main Memory)

110 Nodes, 7,040 CPU Cores (AMD)

880 GPGPUs (AMD)

Deutscher Wetterdienst

16,578 TFlop/s (Peak Performance), 319.00 TiB (Main Memory)

645 Nodes, 54,400 CPU Cores (NEC)

12,775 TFlop/s (Peak Performance), 246.00 TiB (Main Memory)

497 Nodes, 41,920 CPU Cores (NEC)

Deutsches Elektronen Synchrotron

2,321 TFlop/s (Peak Performance), 393.00 TiB (Main Memory)

764 Nodes, 26,668 CPU Cores (Intel, AMD)

282 GPGPUs (Nvidia)

Deutsches Klimarechenzentrum GmbH

16,600 TFlop/s (Peak Performance), 863.00 TiB (Main Memory)

3,042 Nodes, 389,376 CPU Cores (AMD)

240 GPGPUs (Nvidia)

ZDV

543 TFlop/s (Peak Performance), 41.00 TiB (Main Memory)

302 Nodes, 6,120 CPU Cores (Intel)

124 GPGPUs (Nvidia)

Competence Center High Performance Computing (CC-HPC)

67 TFlop/s (Peak Performance), 14.00 TiB (Main Memory)

198 Nodes (FDR-Infiniband), 3,224 CPU Cores (Intel)

2 GPGPUs (Nvidia)

35 TFlop/s (Peak Performance), 6.00 TiB (Main Memory)

90 Nodes (FDR-Infiniband, QDR-Infiniband), 1,584 CPU Cores (Intel)

3 GPGPUs (Nvidia), 2 Many Core Processors (Intel)

Gesellschaft für wissenschaftliche Datenverarbeitung mbH Göttingen

2,883 TFlop/s (Peak Performance), 90.00 TiB (Main Memory)

402 Nodes (QDR-Infiniband, FDR-Infiniband, Intel Omnipath), 16,640 CPU Cores (Intel, AMD)

278 GPGPUs (Nvidia), 302 Applications (454 Versions)

8,261 TFlop/s (Peak Performance), 487.00 TiB (Main Memory)

1,473 Nodes (Intel Omnipath), 116,152 CPU Cores (Intel)

12 GPGPUs (Nvidia)

14,760 TFlop/s (Peak Performance), 74.00 TiB (Main Memory)

126 Nodes, 8,632 CPU Cores (Intel, AMD)

504 GPGPUs (Nvidia)

Zentrum für Informations- und Medientechnologie

64.00 TiB (Main Memory)

212 Nodes, 6,820 CPU Cores (AMD, Intel, Alpha Data)

230 GPGPUs (Nvidia)

Department of Information Services and Computing

6,800 TFlop/s (Peak Performance), 255.00 TiB (Main Memory)

357 Nodes, 29,776 CPU Cores (Intel, AMD)

268 GPGPUs (Nvidia), 2 FPGAs (AMD XILINX)

hessian.AI

8,830 TFlop/s (Peak Performance), 159.00 TiB (Main Memory)

81 Nodes, 2,784 CPU Cores (AMD)

632 GPGPUs (Nvidia)

Hochschulrechenzentrum

8,500 TFlop/s (Peak Performance), 553.00 TiB (Main Memory)

1,231 Nodes (HDR100-Infiniband, Mellanox HDR100-Infiniband), 123,072 CPU Cores (Intel, AMD)

100 GPGPUs (Nvidia, Intel, AMD)

High-Performance Computing Center Stuttgart

120.00 TiB (Main Memory)

476 Nodes, 14,480 CPU Cores (Intel, NEC)

16 GPGPUs (AMD, Nvidia)

48,100 TFlop/s (Peak Performance), 286.00 TiB (Main Memory)

444 Nodes, 34,432 CPU Cores (AMD)

752 GPGPUs (AMD)

IT Center of RWTH Aachen University

11,484 TFlop/s (Peak Performance), 226.00 TiB (Main Memory)

684 Nodes, 65,664 CPU Cores (Intel)

208 GPGPUs (Nvidia)

Jülich Supercomputing Centre (JSC)

85,000 TFlop/s (Peak Performance), 749.00 TiB (Main Memory)

3,515 Nodes (Mellanox EDR-Infiniband, HDR200-Infiniband), 168,208 CPU Cores (Intel, AMD)

3,956 GPGPUs (Nvidia)

18,520 TFlop/s (Peak Performance), 444.00 TiB (Main Memory)

780 Nodes (Mellanox HDR100-Infiniband), 99,840 CPU Cores (AMD)

768 GPGPUs (Nvidia)

930,000 TFlop/s (Peak Performance), 703.00 TiB (Main Memory)

6,000 Nodes, 1,728,000 CPU Cores (Nvidia)

24,000 GPGPUs (Nvidia)

Zuse-Institut Berlin

10,707 TFlop/s (Peak Performance), 677.00 TiB (Main Memory)

1,192 Nodes (Intel Omnipath, NDR-Infiniband), 113,424 CPU Cores (Intel, AMD)

42 GPGPUs (Nvidia)

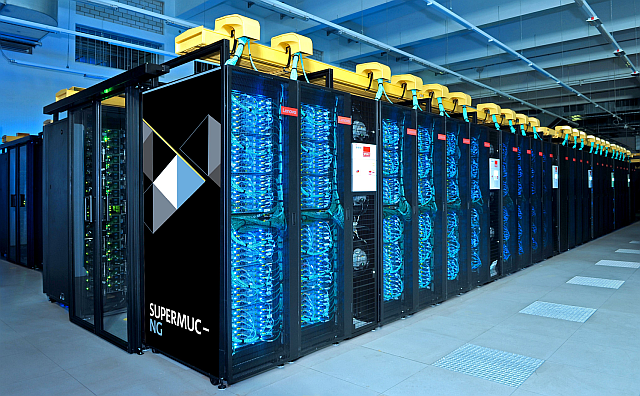

Leibniz-Rechenzentrum der Bayerischen Akademie der Wissenschaften

54,860 TFlop/s (Peak Performance), 822.00 TiB (Main Memory)

6,720 Nodes (Intel Omnipath, Mellanox HDR200-Infiniband), 337,920 CPU Cores (Intel)

960 GPGPUs (Intel)

Max Planck Computing & Data Facility

24,800 TFlop/s (Peak Performance), 517.00 TiB (Main Memory)

1,784 Nodes, 128,448 CPU Cores (Intel)

768 GPGPUs (Nvidia)

4,900 TFlop/s (Peak Performance), 445.00 TiB (Main Memory)

768 Nodes, 98,304 CPU Cores (AMD)

36,000 TFlop/s (Peak Performance), 75.00 TiB (Main Memory)

300 Nodes, 14,400 CPU Cores (AMD)

600 GPGPUs (AMD)

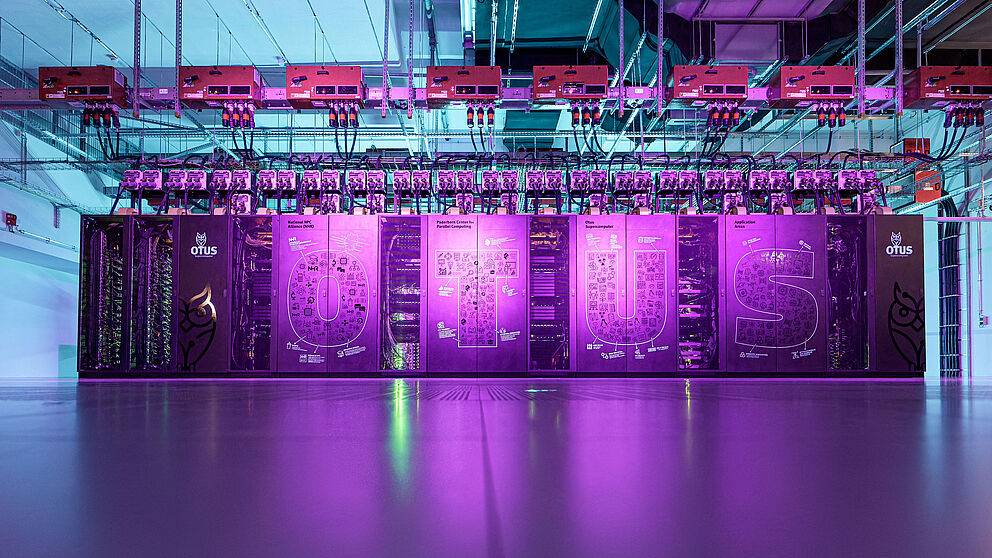

Paderborn Center for Parallel Computing

835 TFlop/s (Peak Performance), 51.00 TiB (Main Memory)

274 Nodes (Intel Omnipath), 10,960 CPU Cores (Intel)

18 GPGPUs (Nvidia)

7,100 TFlop/s (Peak Performance), 347.00 TiB (Main Memory)

1,121 Nodes (Mellanox HDR100-Infiniband), 143,488 CPU Cores (AMD)

136 GPGPUs (Nvidia), 80 FPGAs (Bittware, AMD XILINX)

18,900 TFlop/s (Peak Performance), 593.00 TiB (Main Memory)

743 Nodes (Mellanox NDR-Infiniband), 142,656 CPU Cores (AMD)

108 GPGPUs (Nvidia), 32 FPGAs (AMD XILINX)

Regionales Hochschulrechenzentrum Kaiserslautern-Landau (RHRZ)

3,072 TFlop/s (Peak Performance), 52.00 TiB (Main Memory)

489 Nodes (QDR-Infiniband, Intel Omnipath), 10,520 CPU Cores (Intel, AMD)

56 GPGPUs (Nvidia), 56 Applications (228 Versions)

489 TFlop/s (Peak Performance), 68.00 TiB (Main Memory)

244 Nodes (Omnipath), 4,240 CPU Cores (AMD, Intel)

56 GPGPUs (Nvidia)

Hochschulrechenzentrum

IT.SERVICES

URZ

Scientific Computing Center

27,960 TFlop/s (Peak Performance), 291.00 TiB (Main Memory)

799 Nodes (HDR200-Infiniband), 60,460 CPU Cores (Intel, AMD)

756 GPGPUs (Nvidia)

8,865 TFlop/s (Peak Performance), 127.00 TiB (Main Memory)

393 Nodes (Hewlett Packard Enterprise (HPE) NDR-Infiniband, HDR200-Infiniband), 27,632 CPU Cores (Intel, AMD)

188 GPGPUs (Nvidia, AMD)

Scientific Computing Center

IT und Medien Centrum

Universität Bielefeld – Fakultät Physik

Zentrum für Informations- und Mediendienste

5,500 TFlop/s (Peak Performance), 177.00 TiB (Main Memory)

259 Nodes, 29,008 CPU Cores (Intel)

68 GPGPUs (Nvidia)

Regionales Rechenzentrum (RRZ)

2,640 TFlop/s (Peak Performance), 147.00 TiB (Main Memory)

182 Nodes, 34,432 CPU Cores (AMD)

32 GPGPUs (Nvidia)

Zentrum für Informations- und Medientechnologie

Kommunikations- und Informationszentrum (kiz)

188.00 TiB (Main Memory)

692 Nodes, 33,216 CPU Cores (Intel)

28 GPGPUs (Nvidia), 2 FPGAs (Bittware)

Zentrum für Informationsverarbeitung

Zentrum für Datenverarbeitung

3,125 TFlop/s (Peak Performance), 190.00 TiB (Main Memory)

1,948 Nodes (Intel Omnipath), 52,248 CPU Cores (Intel)

188 GPGPUs (Nvidia, NEC)

2,800 TFlop/s (Peak Performance), 219.00 TiB (Main Memory)

600 Nodes (HDR100-Infiniband), 76,800 CPU Cores (AMD)

40 GPGPUs (Nvidia)

Center for Information Services and High Performance Computing

5,443 TFlop/s (Peak Performance), 34.00 TiB (Main Memory)

34 Nodes (HDR200-Infiniband), 1,632 CPU Cores (AMD)

272 GPGPUs (Nvidia)

4,050 TFlop/s (Peak Performance), 315.00 TiB (Main Memory)

630 Nodes (HDR100-Infiniband), 65,520 CPU Cores (Intel)

762 TFlop/s (Peak Performance), 94.00 TiB (Main Memory)

188 Nodes (HDR200-Infiniband), 24,064 CPU Cores (AMD)

39,664 TFlop/s (Peak Performance), 111.00 TiB (Main Memory)

148 Nodes (HDR200-Infiniband), 9,472 CPU Cores (AMD)

592 GPGPUs (Nvidia)

Erlangen National Center for High Performance Computing (NHR@FAU)

511 TFlop/s (Peak Performance), 46.00 TiB (Main Memory)

728 Nodes (Intel Omnipath), 14,560 CPU Cores (Intel)

21 Applications (61 Versions)

6,080 TFlop/s (Peak Performance), 78.00 TiB (Main Memory)

82 Nodes (Mellanox HDR200-Infiniband, HDR200-Infiniband), 10,496 CPU Cores (AMD)

656 GPGPUs (Nvidia)

5,450 TFlop/s (Peak Performance), 248.00 TiB (Main Memory)

992 Nodes (Mellanox HDR100-Infiniband), 71,424 CPU Cores (Intel)

51,920 TFlop/s (Peak Performance), 144.00 TiB (Main Memory)

192 Nodes, 24,576 CPU Cores (AMD)

768 GPGPUs (Nvidia)